The Classical Approach to Probability

Introduction

The mathematical foundation of probability is rooted in the concept of sets. Consider an experiment with a set of potential outcomes. Before the experiment is performed, the specific outcome is unknown, and the possibilities are captured by that set. For instance, consider tossing a coin, a simple experiment with two potential outcomes: heads or tails. Before the coin is tossed, the set of possibilities is {Heads, Tails}. The act of tossing the coin is the experiment. While the coin is in mid-air, either outcome from the set could materialize. Only after the coin lands is the uncertainty resolved: the result “crystallizes” into the actual outcome, Heads or Tails. In the language of sets and probability, the set {Heads, Tails} contains all possible outcomes, and, assuming the coin is fair, the probability associated with each outcome before the toss is $1/2$.

Sample spaces and Pebble World

Sample Space

In probability theory, a sample space is a fundamental concept representing the set of all possible outcomes of a particular experiment. It serves as the universe from which we draw potential results. Consider rolling a six-sided die; the sample space for this experiment would be {1, 2, 3, 4, 5, 6}, as these are all the possible outcomes.

Diving into Pebble World

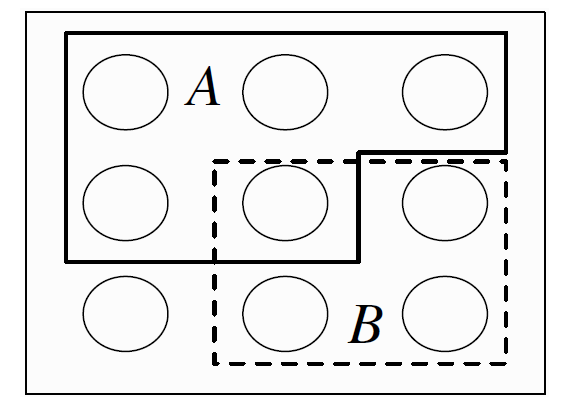

Now, let’s introduce the concept of the Pebble World as a metaphorical tool to understand probability. Imagine placing pebbles on different points within the sample space, where each pebble corresponds to a possible outcome. The density or number of pebbles on a specific outcome reflects the likelihood or probability of that outcome occurring. For instance, let’s return to the six-sided die. If the die is fair, each number from 1 to 6 carries the same number of pebbles, so each outcome has probability $1/6$.
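The pebble picture can be made concrete with a small sketch. The function name `pebble_probability` and the loaded-die masses below are hypothetical choices for illustration; the only idea taken from the text is that an outcome's probability is its share of the total pebble mass.

```python
from fractions import Fraction

def pebble_probability(pebbles, event):
    """Probability of `event` = pebble mass inside the event / total pebble mass.

    `pebbles` maps each outcome to an integer mass (number of pebbles).
    """
    total = sum(pebbles.values())
    in_event = sum(mass for outcome, mass in pebbles.items() if outcome in event)
    return Fraction(in_event, total)

# Fair die: one pebble on each face.
fair_die = {face: 1 for face in range(1, 7)}

# Hypothetical loaded die: five pebbles on 6, one on every other face.
loaded_die = {1: 1, 2: 1, 3: 1, 4: 1, 5: 1, 6: 5}

print(pebble_probability(fair_die, {3}))    # 1/6
print(pebble_probability(loaded_die, {6}))  # 1/2
```

With equal masses the calculation reduces to counting pebbles; unequal masses shift probability toward the heavier outcomes.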

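Definition (Sample space and event): The sample space $S$ of an experiment is the set of all possible outcomes of the experiment. An event $A$ is a subset of the sample space $S$, and we say that $A$ occurred if the actual outcome is in $A$.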

The sample space of an experiment can be finite, countably infinite, or uncountably infinite.
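As a minimal sketch of these ideas for a finite sample space, the die example can be written with Python sets. The event names `even` and `at_least_five` and the helper `occurred` are illustrative assumptions, not notation from the text.

```python
# Sample space for one roll of a six-sided die.
sample_space = frozenset({1, 2, 3, 4, 5, 6})

# An event is any subset of the sample space (names chosen for illustration).
even = frozenset({2, 4, 6})
at_least_five = frozenset({5, 6})

def occurred(event, outcome):
    """An event occurred if the observed outcome belongs to it."""
    return outcome in event

# Both events really are subsets of the sample space.
assert even <= sample_space and at_least_five <= sample_space

outcome = 6  # a hypothetical observed roll
print(occurred(even, outcome), occurred(at_least_five, outcome))
```

The same roll can make several events occur at once, because an outcome may belong to many subsets.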

Justification of Countable & Uncountable Sets

Mathematics Behind the Concept

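A set may be finite or infinite. If $A$ is a finite set, we write $|A|$ for the number of elements in $A$, called its size or cardinality. For finite sets $A$ and $B$,

$$|A \cup B| = |A| + |B| - |A \cap B|.$$

This is a form of the inclusion-exclusion principle. It says that to count how many elements are in the union of $A$ and $B$, we can add the separate counts for each set and then subtract the elements counted twice, namely those in both $A$ and $B$.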
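The two-set form of inclusion-exclusion, $|A \cup B| = |A| + |B| - |A \cap B|$, can be checked on a small case. The sets below are arbitrary illustrative values.

```python
# Two arbitrary finite sets that overlap in {4, 5}.
A = {1, 2, 3, 4, 5}
B = {4, 5, 6, 7}

# Count the union directly.
union_size = len(A | B)

# Count it by adding the separate sizes and removing the double-counted overlap.
by_formula = len(A) + len(B) - len(A & B)

print(union_size, by_formula)  # 7 7
```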

Example

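For example, suppose that we want to count the number of people in a movie theater with $n$ seats. Instead of counting heads, we can check whether every seat is occupied and no one is standing. If so, people and seats are in one-to-one correspondence, so there must be exactly $n$ people.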

Paradoxical Justification

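This idea of looking at one-to-one correspondences makes sense both for finite and infinite sets. Consider the perfect squares $1^2, 2^2, 3^2, \dots$. On the one hand, there seem to be fewer perfect squares than positive integers, since every perfect square is a positive integer but many positive integers are not perfect squares. On the other hand, pairing each positive integer $n$ with $n^2$ is a one-to-one correspondence, so the two sets have the same size.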

Hilbert’s Infinite Hotel Paradox

Another famous example of this is Hilbert’s hotel. For any hotel in the real world, the number of rooms is finite. If every room is occupied, there is no way to accommodate more guests, other than by cramming more people into already occupied rooms. Now consider an imaginary hotel with an infinite sequence of rooms, numbered $1, 2, 3, \dots$. Suppose that every room is occupied and a weary traveler arrives looking for a room.

Can the hotel give the traveler a room, without leaving any of the current guests without a room?

Yes, one way is to have the guest in room $n$ move to room $n + 1$, for every $n = 1, 2, 3, \dots$. This frees room 1 for the traveler.

What if infinitely many travelers arrive at the same time, such that their cardinality is the same as that of the positive integers (so we can label the travelers as traveler 1, traveler 2, . . . )?

The hotel could fit them in one by one by repeating the above procedure over and over again, but it would take forever (infinitely many moves) to accommodate everyone, and it would be bad for business to make the current guests keep moving over and over again.

Can the room assignments be updated just once so that everyone has a room?

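Yes, one way is to have the guest in room $n$ move to room $2n$ for all $n = 1, 2, 3, \dots$, and then have traveler $n$ move into room $2n - 1$. Every current guest keeps a room, and the odd-numbered rooms go to the newcomers.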
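One possible single-step reassignment, assumed here for illustration, sends the guest in room $n$ to room $2n$ and gives traveler $k$ room $2k - 1$. A program can only inspect a finite prefix of the hotel, so the sketch below illustrates the pattern rather than proving anything about the infinite case; the function name `assignments` is hypothetical.

```python
def assignments(num):
    """Room assignments for the first `num` guests and first `num` travelers."""
    guests = {n: 2 * n for n in range(1, num + 1)}         # guest n -> even room 2n
    travelers = {k: 2 * k - 1 for k in range(1, num + 1)}  # traveler k -> odd room 2k - 1
    return guests, travelers

guests, travelers = assignments(1000)
rooms = list(guests.values()) + list(travelers.values())

# No two people share a room, and rooms 1..2000 are all used exactly once.
assert len(set(rooms)) == len(rooms)
assert sorted(rooms) == list(range(1, 2001))

print(guests[3], travelers[3])  # 6 5
```

Guests land only in even rooms and travelers only in odd rooms, which is why the two groups can never collide.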

An infinite set is called countably infinite if it has the same cardinality as the set of all positive integers. A set is called countable if it is finite or countably infinite, and uncountable otherwise. The mathematician Cantor showed that not all infinite sets are the same size. In particular, the set of all real numbers is uncountable, as is any interval in the real line of positive length.

Numerical Problems

Experimenting amounts to randomly selecting one pebble. If all the pebbles are of the same mass, all the pebbles are equally likely to be chosen. This special case is the topic of our upcoming sessions. Set theory is very useful in probability since it provides a rich language for expressing and working with events. Set operations, especially unions, intersections, and complements, make it easy to build new events in terms of already-defined events. These concepts also let us express an event in more than one way; often, one expression for an event is much easier to work with than another expression for the same event.

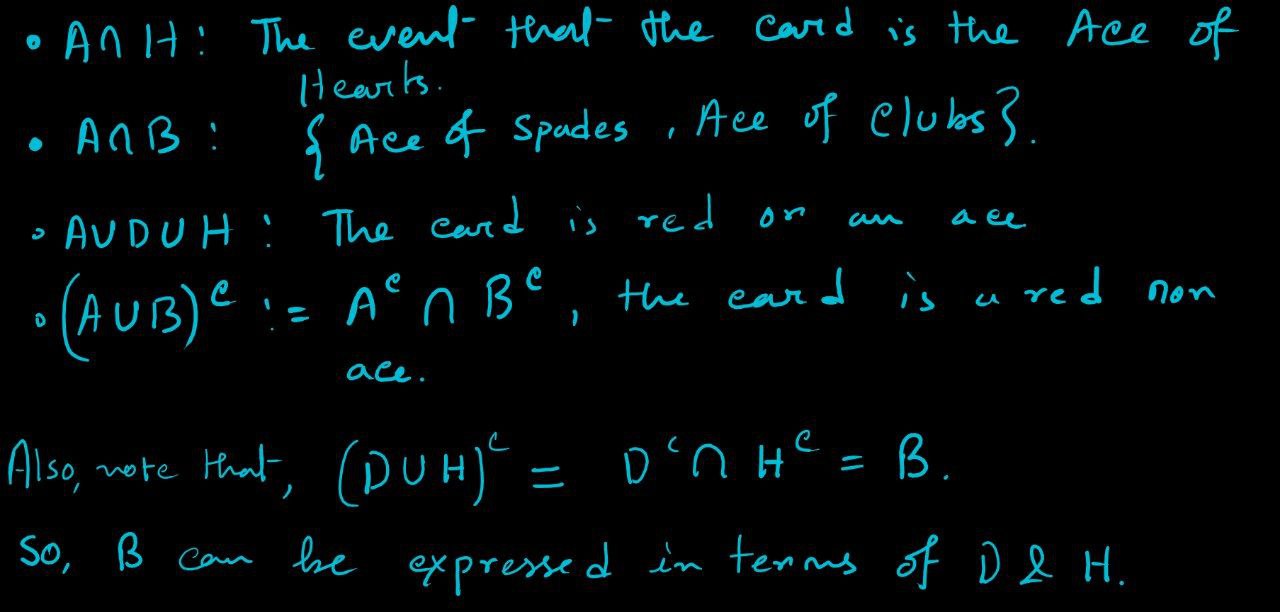

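For example, let $S$ be the sample space of an experiment and let $A, B \subseteq S$ be events. Then the union $A \cup B$ is the event that occurs if and only if at least one of $A$, $B$ occurs, the intersection $A \cap B$ occurs if and only if both occur, and the complement $A^c$ occurs if and only if $A$ does not occur. De Morgan's laws relate these operations:

$$(A \cup B)^c = A^c \cap B^c, \qquad (A \cap B)^c = A^c \cup B^c,$$

since saying that it is not the case that at least one of $A$ and $B$ occurs is the same as saying that $A$ does not occur and $B$ does not occur, and saying that it is not the case that both occur is the same as saying that at least one of them does not occur.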
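Identities between unions, intersections, and complements can be checked mechanically on a small sample space. The sketch below verifies De Morgan's laws, $(A \cup B)^c = A^c \cap B^c$ and $(A \cap B)^c = A^c \cup B^c$, for two illustrative events on a die roll; the events and the helper `complement` are assumptions made for the example.

```python
# Sample space: one roll of a six-sided die.
S = set(range(1, 7))

# Two illustrative events.
A = {2, 4, 6}  # the roll is even
B = {1, 2, 3}  # the roll is at most 3

def complement(event):
    """Outcomes in the sample space that are not in the event."""
    return S - event

# "Not (A or B)" is the same event as "(not A) and (not B)".
assert complement(A | B) == complement(A) & complement(B)

# "Not (A and B)" is the same event as "(not A) or (not B)".
assert complement(A & B) == complement(A) | complement(B)

print(complement(A | B))  # {5}
```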

The notion of sample space is very general and abstract, so it is important to have some concrete examples in mind.

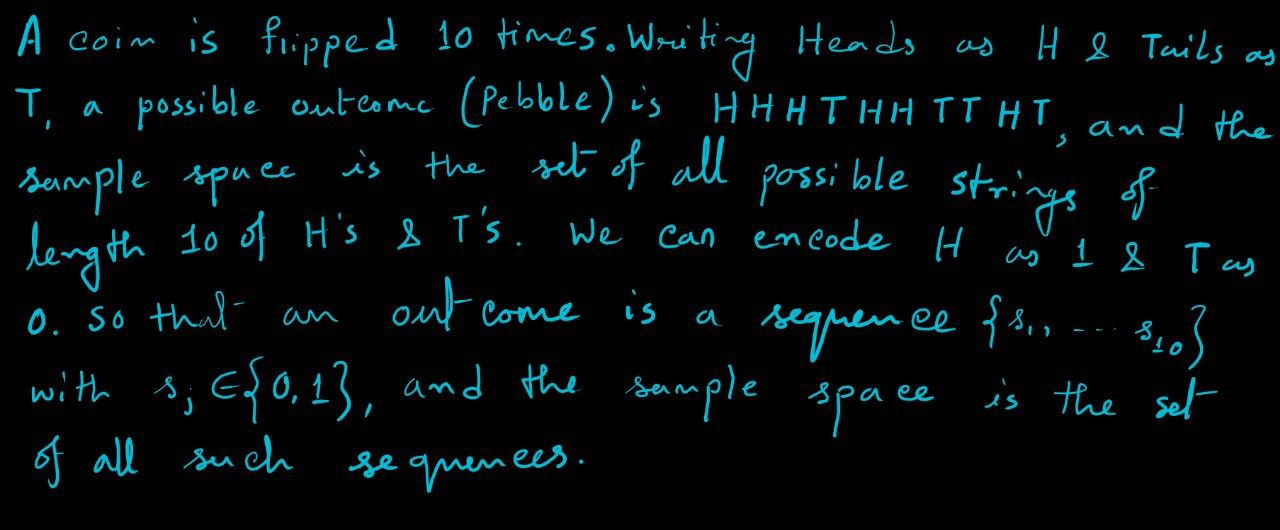

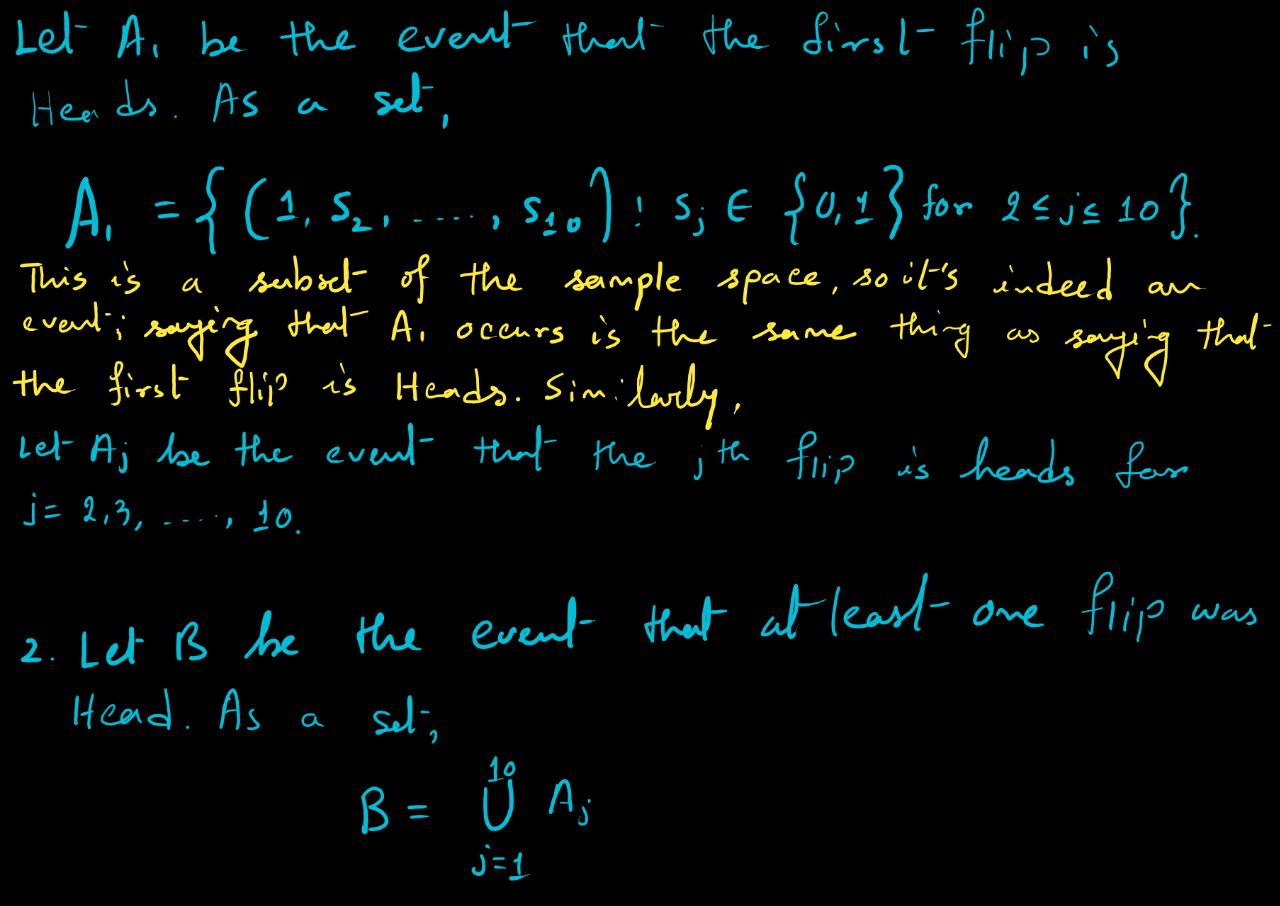

Coin Flip Example


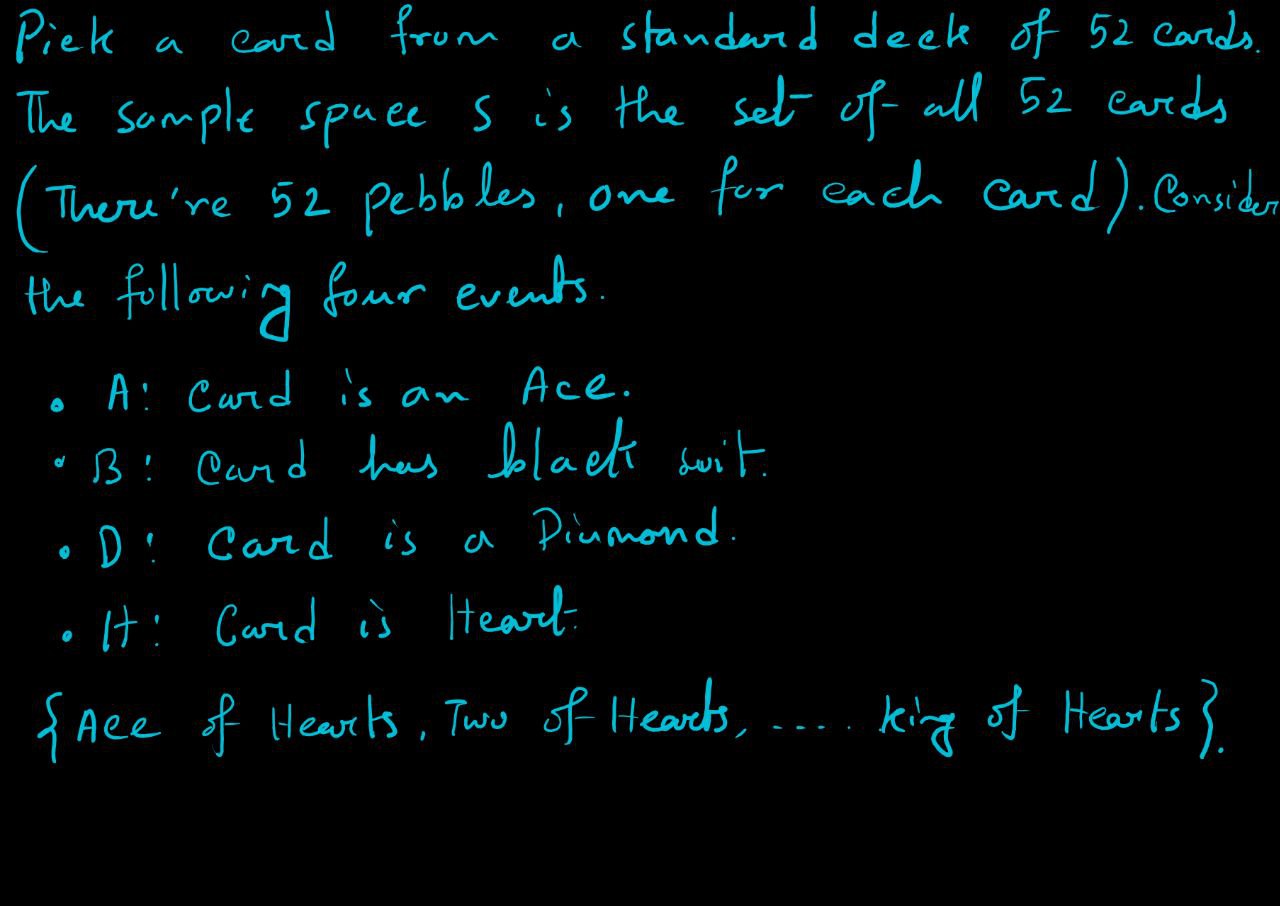

(Pick a Card, Any Card) Example

On the other hand, the event that the card is a spade can’t be written in terms of

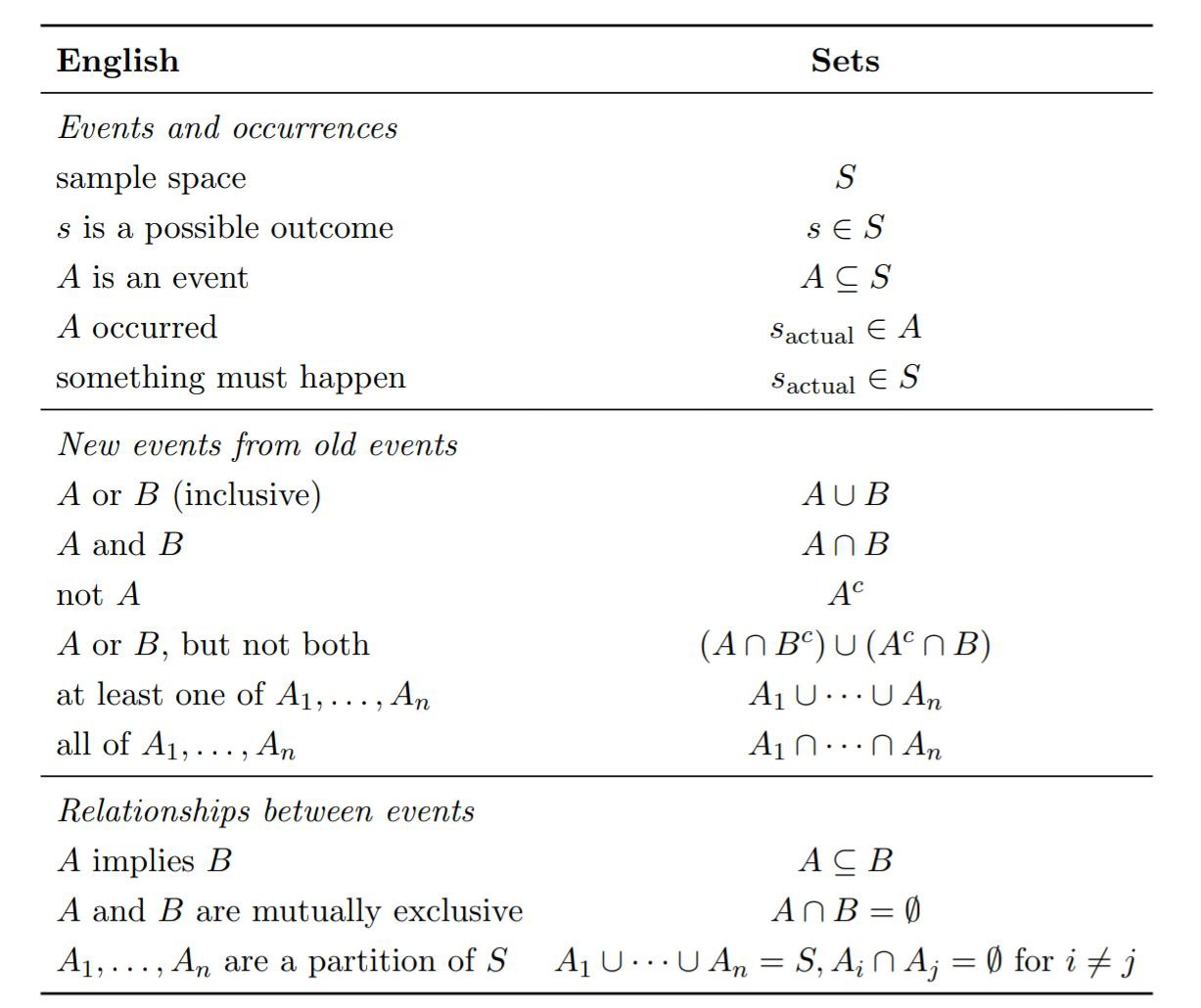

Conversion Between English and Sets

As the preceding examples demonstrate, events can be described in English or set notation. Sometimes the English description is easier to interpret while the set notation is easier to manipulate. Let

Naive Definition of Probability

Historically, the earliest definition of the probability of an event was to count the number of ways the event could happen and divide by the total number of possible outcomes for the experiment. We call this the naive definition since it is restrictive and relies on strong assumptions; nevertheless, it is important to understand, and useful when not misused.

Definition:

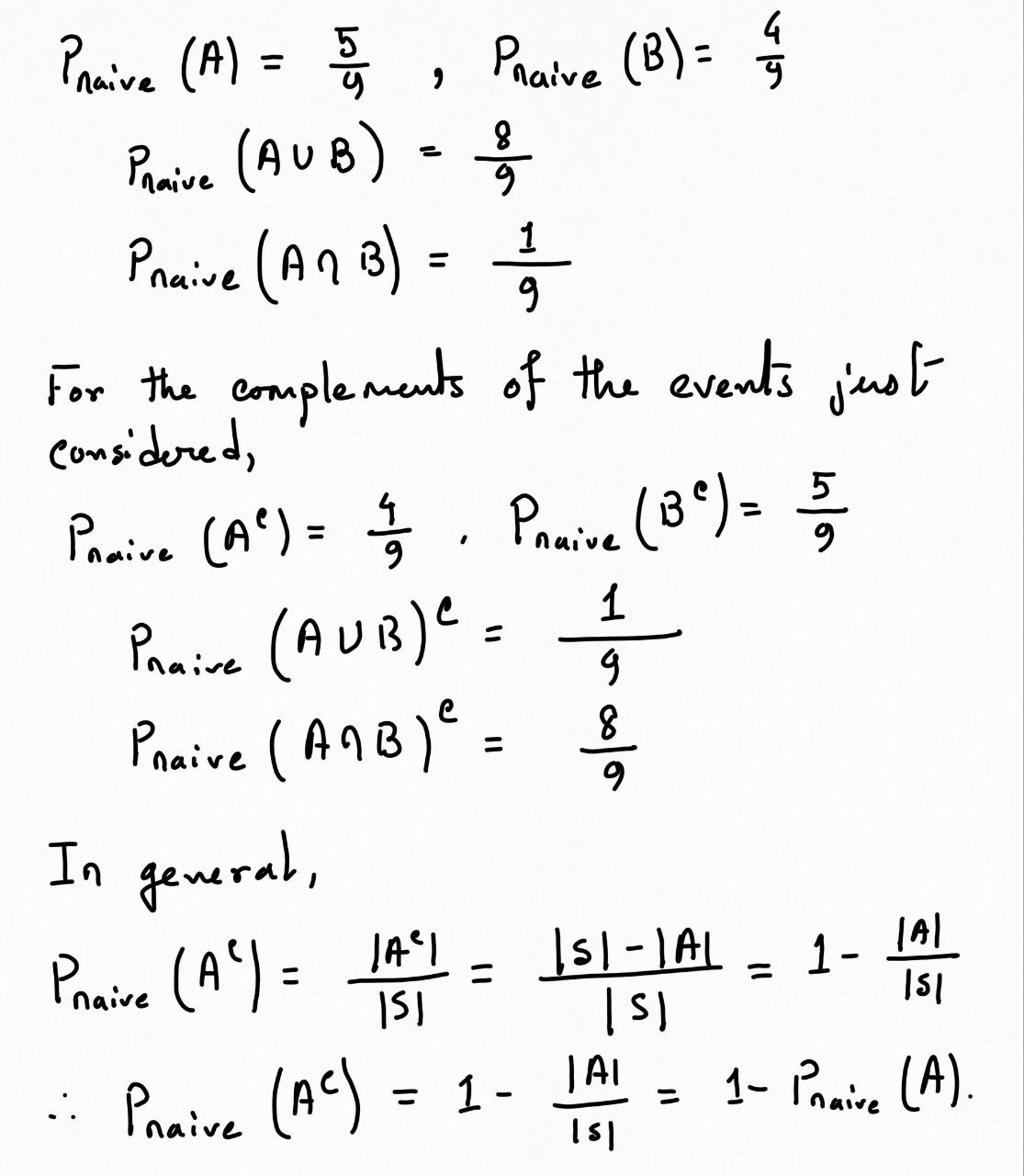

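Naive definition of probability: Let $A$ be an event for an experiment with a finite sample space $S$. The naive probability of $A$ is

$$P_{\text{naive}}(A) = \frac{|A|}{|S|} = \frac{\text{number of outcomes favorable to } A}{\text{total number of outcomes in } S}.$$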

In terms of Pebble World, the naive definition just says that the probability of an event $A$ is the fraction of pebbles that are in $A$.
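The counting recipe (favorable outcomes divided by total outcomes) can be sketched as follows. The function name `naive_probability` and the two-dice example are hypothetical, and the calculation is only meaningful under the assumption that all outcomes are equally likely.

```python
from fractions import Fraction
from itertools import product

def naive_probability(event, sample_space):
    """|event| / |sample_space|, assuming equally likely outcomes."""
    event, sample_space = set(event), set(sample_space)
    if not event <= sample_space:
        raise ValueError("event must be a subset of the sample space")
    return Fraction(len(event), len(sample_space))

# Illustrative experiment: roll two fair dice, giving 36 ordered pairs.
two_dice = set(product(range(1, 7), repeat=2))

sum_is_seven = {(a, b) for a, b in two_dice if a + b == 7}
print(naive_probability(sum_is_seven, two_dice))  # 1/6

# Counting the complement is sometimes simpler: "at least one six" = not "no six".
no_six = {(a, b) for a, b in two_dice if a != 6 and b != 6}
print(1 - naive_probability(no_six, two_dice))    # 11/36
```

Using `Fraction` keeps the answers exact, which makes it easier to compare them with hand calculations.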

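A good strategy when trying to find the probability of an event is to start by thinking about whether it will be easier to find the probability of the event or the probability of its complement. The naive definition is very restrictive in that it requires the sample space to be finite, with every pebble having the same mass. It has often been misapplied by people who assume equally likely outcomes without justification, arguing to the effect of “either it will happen or it won’t, and we don’t know which, so it’s 50-50”.

In addition to sometimes giving absurd probabilities, this type of reasoning isn’t even internally consistent. For example, it would say that the probability of life on Mars is $1/2$ (“either there is or there isn’t life on Mars”), but it would also say that the probability of intelligent life on Mars is $1/2$, even though the latter should clearly be strictly less likely than the former. Still, there are several important types of problems where the naive definition is applicable: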

when there is symmetry in the problem that makes outcomes equally likely. It is common to assume that a coin has a 50% chance of landing Heads when tossed, due to the physical symmetry of the coin. For a standard, well-shuffled deck of cards, it is reasonable to assume that all orders are equally likely. There aren’t certain overeager cards that especially like to be near the top of the deck; any particular location in the deck is equally likely to house any of the 52 cards.

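when the outcomes are equally likely by design. For example, consider conducting a survey of $n$ people in a population of $N$ people. A common goal is to obtain a simple random sample, meaning that the $n$ people are chosen at random with all subsets of size $n$ equally likely.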

when the naive definition serves as a useful null model. In this setting, we assume that the naive definition applies just to see what predictions it would yield, and then we can compare observed data with predicted values to assess whether the hypothesis of equally likely outcomes is tenable.
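As a rough sketch of the null-model idea, the code below compares observed counts against the counts predicted by equally likely outcomes, using a Pearson chi-square statistic. The choice of statistic is an assumption made here rather than something the text specifies, and the "observed" rolls are simulated with a fixed seed, not real data.

```python
import random
from collections import Counter

def chi_square_statistic(observed_counts, outcomes):
    """Sum of (observed - expected)^2 / expected under equally likely outcomes."""
    outcomes = list(outcomes)
    n = sum(observed_counts.get(o, 0) for o in outcomes)
    expected = n / len(outcomes)  # naive prediction: the same count for every outcome
    return sum((observed_counts.get(o, 0) - expected) ** 2 / expected
               for o in outcomes)

faces = list(range(1, 7))

# Simulated stand-in for observed data: 600 rolls of a fair die.
rng = random.Random(0)
rolls = Counter(rng.choice(faces) for _ in range(600))
print(chi_square_statistic(rolls, faces))

# A clearly lopsided (hypothetical) data set gives a much larger statistic.
lopsided = {1: 60, 2: 0, 3: 0, 4: 0, 5: 0, 6: 0}
print(chi_square_statistic(lopsided, faces))  # 300.0
```

A small statistic is consistent with equally likely outcomes, while a large one suggests the naive model is a poor fit; deciding what counts as "large" requires a reference distribution, which is beyond this sketch.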

Remarks:

See Diaconis, Holmes, and Montgomery [1] for a physical argument that the chance of a tossed coin coming up the way it started is about 0.51.


References

Persi Diaconis. The Markov chain Monte Carlo revolution. Bulletin of the American Mathematical Society, 46(2):179–205, 2009.

Andrew Gelman, John B. Carlin, Hal S. Stern, David B. Dunson, Aki Vehtari, and Donald B. Rubin. Bayesian Data Analysis. CRC Press, 2013.